Note: This had been sitting, unfinished, for about a month while I was trying to find a good banner image to go with it (or, failing that, possibly get one made). Both activities failed, so – just enjoy the post? Please?

In a slight change of pace, I’m going to comment somewhat on a topic which many people seem to completely misunderstand … Artificial Intelligence or “AI” as it’s usually known.

I’ve recently seen and heard lots and lots of jabbering and blathering on about how “AI is going to take over the world” and such – fueled, I’m sure, by movies like The Terminator and The Matrix. As potentially interesting as the concept (in those movies) is, nothing which currently exists comes even close to approximating it.

What Does “Artificial Intelligence” Mean?

The common perception of the term usually conjures up the idea of some sort of malevolent machines, thinking of ways to enslave or eliminate humanity, paving the way for the rise of the “Age of Machines”!

Or, alternately, the perception is of a more benevolent and “human” (or human-friendly and human-emotion-seeking) intelligence.

Regardless of the perceived intent, however, the thought always includes the element of “intelligence”, of … consciousness … with intent and volition. An Artificial Intelligence has thoughts and creativity and desires (even if those are potentially foreign to us more meat-based intellects).

The Current Generation of “AI”

Rather than being led astray by the imaginations of science-fiction authors (and screenwriters and other purveyors of fiction), let’s take a look at what the actual technology of the day is.

The current set of tools which are being labeled “Artificial Intelligence” are really nothing more than a bunch of extremely powerful and refined analytical processes. They are no more “intelligent” than an Excel™ spreadsheet or an SQL database. [Yes, it’s possible to be traumatized by extremely complex versions of either one, but the tools themselves are just that – tools.]

Oh, and those tools (AI) happen to be wrapped in something which is able to: 1) process and decompose language – to convert it into a database query; and, 2) process and recompose the output into something which looks more like “natural” language. The same is true for image generation (with a different type of output processing).

This is even true for the analytic “algorithms” which seem to be ever present when you watch a video streaming site or go online shopping.

There are massive (relatively speaking) databases hiding behind those analytical results. And the reason GPUs (like those from Nvidia, etc.) are so needed is that the data processing (computation) to figure out the right queries and grind through the data really is that intensive.

Really? I Shouldn’t Be Scared?

No matter what anyone might otherwise suggest to you – there is no thought … no volition … no desire … at all, of any sort, hiding behind any of the current generation of AIs. There is also no imagination. AI cannot truly create something which has not existed before. At best, it can glue together pieces which humans have already created. AI is simply a computer program running on a computer (or a bunch of networked computers) somewhere. Turn the power off and, just like with your desktop computer, or laptop, or phone … it stops doing anything.

This is not to suggest it isn’t useful. I’ll use the “assisted shopping” thing I briefly touched on above as an example. Could humans compile and analyze all of the data that goes into it and come up with the same (or even better) results? Absolutely. However, a human can’t do it as fast as a computer process can. A human could, however, probably come up with a better understanding of what you’re trying to find than a computational algorithm – because humans can determine (“intuit”) the desired result.

Or, for doing research … anyone can go to a library and do hours and hours (or days and days, or months and months) of intense research … or get a degree (as needed) in some specific field … and come up with the same answers. But a computer isn’t going to extend the boundaries of a field, nor make a startling new discovery which breaks open new areas of study.

Anyone who suggests that “AI is taking over! Run for the hills!!!” does not understand technology; all they’ve ever learned about it is from fiction writers who still believe that “hacking” can be done by writing three lines of a computer program and suddenly, poof, they’ve penetrated into secure systems. It just doesn’t work that way. (That’s another thing that bugs the heck out of me with fiction that talks about “expert hackers” as if they can be found in every other basement, but that’s a whoooole separate topic.)

In the end, AI is just another tool humanity has developed. It is, arguably, much more versatile and flexible than a hammer or a screwdriver … but only so long as the data exists and the power is running. And, let’s face it – if you need to tighten a screw or drive a nail, you’re still going to need those “cruder” tools.

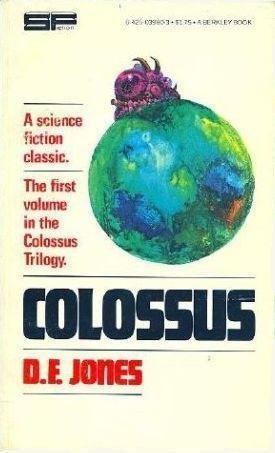

Postscript 1 – Fear of AI Is Not New

One of the first (I believe) works of fiction dealing with the fear of a malevolent machine intelligence was the science fiction novel, Colossus, by Dennis Feltham Jones, from 1966. It was considered such a significant (or interesting?) book on the topic that it was later turned into a Hollywood movie titled Colossus: The Forbin Project, in 1970. By today’s standards, it’s probably viewed as rather campy, but it is certainly a “fun” look at how far back the human fear of machines taking over goes: well before the likes of WarGames or The Terminator came to the fore and took over our collective sense of how much we should fear a malevolent machine intelligence.

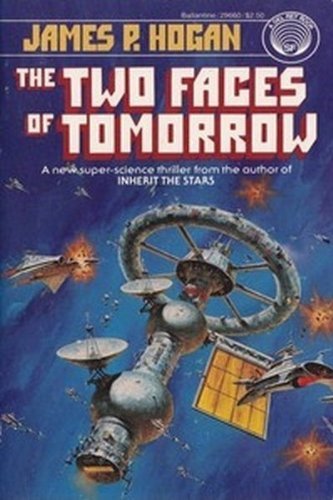

Postscript 2 – A Fun, Semi-Related, Book

Way, way back, around the time it was first published in fact, I read a book which is still one of my favorites. It’s The Two Faces of Tomorrow, by James P. Hogan. I’ve read and re-read it enough times that my copy of it is very well-worn. So much so, in fact, that I bought a second copy in order to make sure my original didn’t fall apart the next time I even glanced at it the wrong way.

In the prologue, there is an interesting sequence of events. There is a lunar mass-driver which is being used to send the results of lunar mining down to the Earth. Some astronaut surveyors are examining the planned build-site for a second mass-driver. However, there is a bit of a “mountain range” in the way of the intended path of the mass-driver (which, by its nature, needs something very straight through which to drive the aforementioned masses).

Reporting their findings from the site, they put in a job order to clear the obstructing mountain out of the way. This is reported to the local (lunar) “smart” (analytical) computer network. The engineers expect this will result in the provisioning and assignment of lots of … “earth moving” machines – such as digging equipment, etc., and the process of job-site preparation will take a long time.

The computer system queries as to the job priority. Unthinkingly, they say it has the highest possible priority. The computer responds that it will be completed in mere minutes. Surprised by the result, the astronauts are sure it is some sort of error … until the mountain starts being bombarded by redirected masses from the existing mass-driver – which was the result of the computer “deciding” the most efficient method for removing the obstruction was to use high-velocity kinetic mass to obliterate it.

The job is, indeed, completed in the projected time – but with a complete disregard for method or safety, since those were not included in the parameters used to determine how the job would be completed. Needless to say, a human provisioning such a removal would either be unlikely to use that method or, if it was suggested, would ensure the target area was cleared of anyone who might become a casualty.
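For the programmers in the audience, the "those were not included in the parameters" problem can be sketched in a few lines of Python. To be clear, this is my own toy illustration and not anything from the book: the `Method` class, the `pick_fastest` function, the method names, and every number in it are made up. It only shows that a process told to minimize time, and nothing else, will happily pick the dangerous option.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Method:
    name: str
    minutes: float        # invented completion time, for illustration only
    safe_for_crew: bool   # invented safety flag, for illustration only


# Hypothetical options for "remove the mountain"; none of these figures
# come from the book.
METHODS = [
    Method("excavation machinery", minutes=60 * 24 * 30, safe_for_crew=True),
    Method("mass-driver bombardment", minutes=20, safe_for_crew=False),
]


def pick_fastest(methods, require_safe=False):
    """Return the quickest method, optionally skipping the unsafe ones."""
    candidates = [m for m in methods if m.safe_for_crew or not require_safe]
    return min(candidates, key=lambda m: m.minutes)


if __name__ == "__main__":
    # "Highest possible priority" with no mention of safety.
    print(pick_fastest(METHODS).name)
    # The same request, with the constraint a human would have assumed.
    print(pick_fastest(METHODS, require_safe=True).name)
```

Nothing in the first call is "malevolent"; the safety requirement simply was never part of the question being asked.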

The remainder of the book deals with humans attempting to figure out how to provide for (and manage) the potential malevolence (intended or otherwise) of an “evolving” smart computer system.